Authors

Tom Williams

Venue

1st International Workshop on Virtual, Augmented, and Mixed Reality for HRI

Publication Year

2018

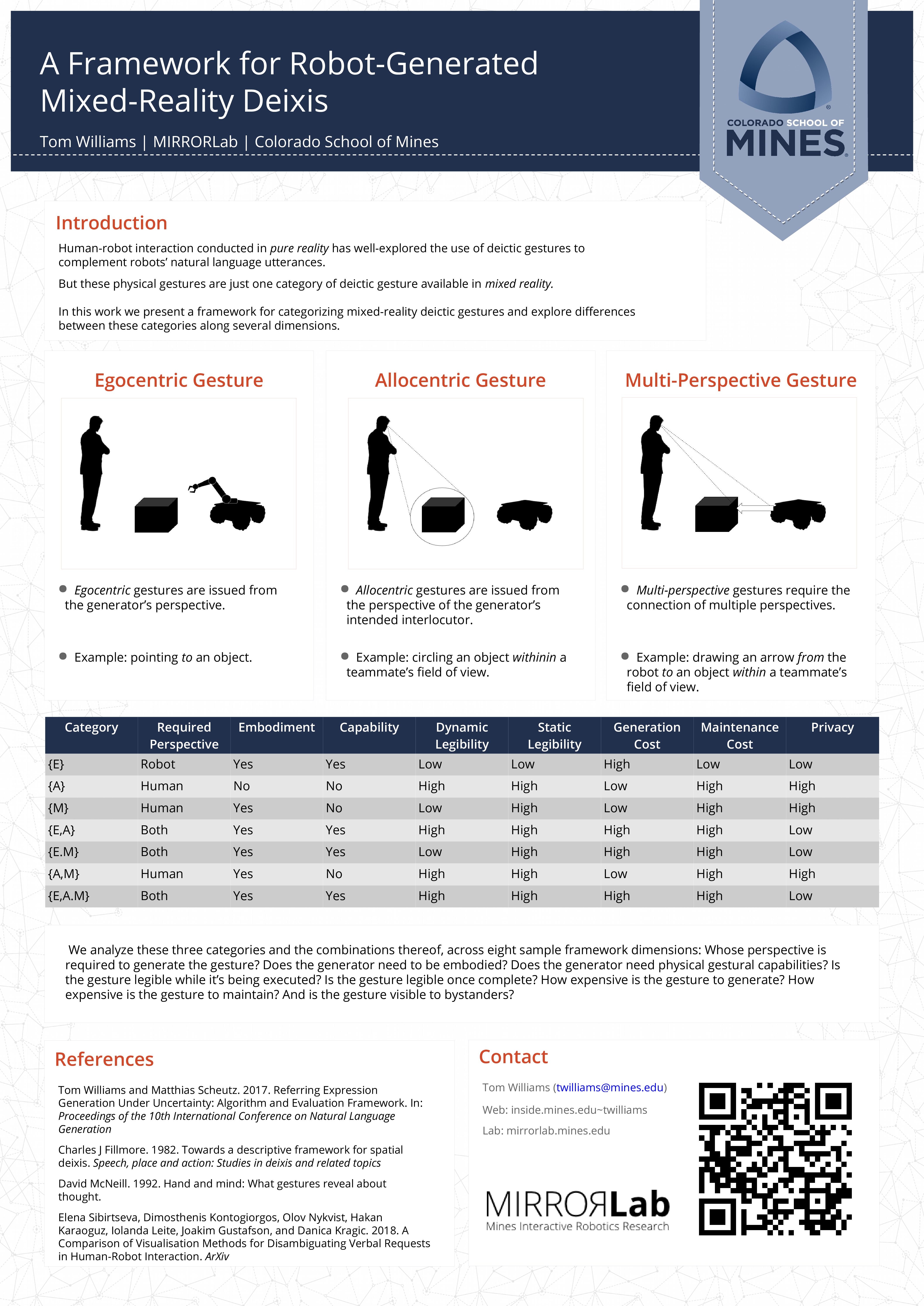

Language-capable robots interacting with human teammates may need to make frequent reference to nearby objects, locations, or people. In human-robot interaction, such references are often accompanied by deictic gestures such as pointing, performed with human-like arm motions. However, advances in augmented reality technology make new forms of deictic gesture available, which may pick out the target referent more precisely and require less energy on the part of the robot. In this paper, we present a conceptual framework for categorizing the types of mixed-reality deictic gestures that robots may generate in human-robot interaction scenarios, and we present an analysis of how these categories differ along a variety of dimensions.